I am using Gapminder dataset for the purpose of running Random Forest experiment

Data Management

In

cluster analysis variables with large values contribute more to the distance

calculations. Variables measured on different scales should be standardized so

that the solution is based on variables measured on larger scales. We

standardize the cluster variables to have a mean of 0 and SD of 1. The cluster variables are - income per person, internet use rate, female employment rate, armed force rate, employment rate, life expectancy, urban rate, suicide rate.

Cluster Analysis

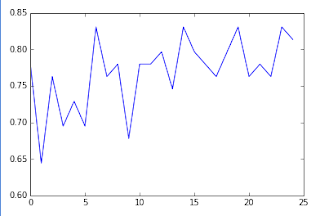

Initially

we do not know how many cluster are there in the given dataset. To begin our

analysis we calculate the average distance of the observations from the cluster

centroids. Then we plot this average distance measure to help us figure out how

many clusters may be optimal. This plot is the elbow curve shown above. The

average distance decreases as the number of cluster increases. Since the goal

of cluster analysis is to minimize the distance between observations and their

assigned clusters we want to choose the fewest numbers of clusters that provides

a low average distance. The bend in the elbow shows where the average distance

value might be leveling off such that adding more clusters doesn't decrease the

average distance as much. Closely examining the plot above we see that there are bends at 2 and 3 clusters. To

help us figure out which of the solutions is best we will further examine the

cluster solutions for at least two and three cluster solutions to see -

- whether they do not overlap

- whether the patterns of means on the clustering variables are unique and meaningful

- whether there are significant differences between the clusters on our external validation variable Polity Score

Canonical Discriminant Analysis

We

will plot the clusters in a scatter plot to see whether or not they overlap

with each other in terms of their locations in the p dimensional space. However

with 8 clustering variables, we will have 8 dimensions. This would be

impossible to visualize in a scatter plot. So we use the canonical discriminate

analysis. This is data reduction technique that uses smaller number of variables

that we linear combination of 8 cluster variables.

Usually, the majority of the variance in the clustering variables will be accounted for by the first

couple of canonical variables and those are the variables that we can plot. The cluster shows good

separation, the observations are spread out indicating less correlation among

the observations and higher within cluster variance.

Pattern of Means

Next

we can take a look at the pattern of means on the clustering variables for each

cluster to see whether they are distinct and meaningful.

From the above means analysis, we see that the third cluster has highest mean income per person, highest mean internet use rate and highest mean urban population. This is keeping with findings from my previous research assignments.

ANOVA - How the clusters differ on Polity Score

We'll use analysis of variance to test whether there are significant differences between clusters on the quantitative POLITYSCORE variable.

The R square and F statistic value from above show that the clusters differ significantly on PolityScore.

Code

# -*- coding: utf-8 -*-

"""

Created on Sun Feb 28 17:10:22 2016

@author: Abhishek

"""

from pandas import Series, DataFrame

import pandas as pd

import numpy as np

import matplotlib.pylab as plt

from sklearn.cross_validation import train_test_split

from sklearn import preprocessing

from sklearn.cluster import KMeans

"""

Data Management

"""

data = pd.read_csv("gapminder.csv")

data['polityscore'] = pd.to_numeric(data['polityscore'], errors='coerce')

data['incomeperperson'] = pd.to_numeric(data['incomeperperson'], errors='coerce')

data['employrate'] = pd.to_numeric(data['employrate'], errors='coerce')

data['femaleemployrate'] = pd.to_numeric(data['femaleemployrate'], errors='coerce')

data['lifeexpectancy'] = pd.to_numeric(data['lifeexpectancy'], errors='coerce')

data['armedforcesrate'] = pd.to_numeric(data['armedforcesrate'], errors='coerce')

data['internetuserate'] = pd.to_numeric(data['internetuserate'], errors='coerce')

data['urbanrate'] = pd.to_numeric(data['urbanrate'], errors='coerce')

data['suicideper100th'] = pd.to_numeric(data['suicideper100th'], errors='coerce')

#upper-case all DataFrame column names

data.columns = map(str.upper, data.columns)

# Data Management

data_clean = data.dropna()

# subset clustering variables

cluster = data_clean[[

'INCOMEPERPERSON',

'INTERNETUSERATE',

'FEMALEEMPLOYRATE',

'ARMEDFORCESRATE',

'EMPLOYRATE',

'LIFEEXPECTANCY',

'URBANRATE',

'SUICIDEPER100TH'

]]

cluster.describe()

# standardize clustering variables to have mean=0 and sd=1

clustervar=cluster.copy()

clustervar['INCOMEPERPERSON']=preprocessing.scale(clustervar['INCOMEPERPERSON'].astype('float64'))

clustervar['INTERNETUSERATE']=preprocessing.scale(clustervar['INTERNETUSERATE'].astype('float64'))

clustervar['FEMALEEMPLOYRATE']=preprocessing.scale(clustervar['FEMALEEMPLOYRATE'].astype('float64'))

clustervar['ARMEDFORCESRATE']=preprocessing.scale(clustervar['ARMEDFORCESRATE'].astype('float64'))

clustervar['EMPLOYRATE']=preprocessing.scale(clustervar['EMPLOYRATE'].astype('float64'))

clustervar['LIFEEXPECTANCY']=preprocessing.scale(clustervar['LIFEEXPECTANCY'].astype('float64'))

clustervar['URBANRATE']=preprocessing.scale(clustervar['URBANRATE'].astype('float64'))

clustervar['SUICIDEPER100TH']=preprocessing.scale(clustervar['SUICIDEPER100TH'].astype('float64'))

# split data into train and test sets

clus_train, clus_test = train_test_split(clustervar, test_size=.3, random_state=123)

# k-means cluster analysis for 1-9 clusters

from scipy.spatial.distance import cdist

clusters=range(1,10)

meandist=[]

for k in clusters:

model=KMeans(n_clusters=k)

model.fit(clus_train)

clusassign=model.predict(clus_train)

meandist.append(sum(np.min(cdist(clus_train, model.cluster_centers_, 'euclidean'), axis=1))

/ clus_train.shape[0])

"""

Plot average distance from observations from the cluster centroid

to use the Elbow Method to identify number of clusters to choose

"""

plt.plot(clusters, meandist)

plt.xlabel('Number of clusters')

plt.ylabel('Average distance')

plt.title('Selecting k with the Elbow Method')

# Interpret 3 cluster solution

model3=KMeans(n_clusters=3)

model3.fit(clus_train)

clusassign=model3.predict(clus_train)

# plot clusters

from sklearn.decomposition import PCA

pca_2 = PCA(2)

plot_columns = pca_2.fit_transform(clus_train)

plt.scatter(x=plot_columns[:,0], y=plot_columns[:,1], c=model3.labels_,)

plt.xlabel('Canonical variable 1')

plt.ylabel('Canonical variable 2')

plt.title('Scatterplot of Canonical Variables for 3 Clusters')

plt.show()

"""

BEGIN multiple steps to merge cluster assignment with clustering variables to examine

cluster variable means by cluster

"""

# create a unique identifier variable from the index for the

# cluster training data to merge with the cluster assignment variable

clus_train.reset_index(level=0, inplace=True)

# create a list that has the new index variable

cluslist=list(clus_train['index'])

# create a list of cluster assignments

labels=list(model3.labels_)

# combine index variable list with cluster assignment list into a dictionary

newlist=dict(zip(cluslist, labels))

# convert newlist dictionary to a dataframe

newclus=DataFrame.from_dict(newlist, orient='index')

newclus

# rename the cluster assignment column

newclus.columns = ['cluster']

# now do the same for the cluster assignment variable

# create a unique identifier variable from the index for the

# cluster assignment dataframe

# to merge with cluster training data

newclus.reset_index(level=0, inplace=True)

# merge the cluster assignment dataframe with the cluster training variable dataframe

# by the index variable

merged_train=pd.merge(clus_train, newclus, on='index')

merged_train.head(n=100)

# cluster frequencies

merged_train.cluster.value_counts()

"""

END multiple steps to merge cluster assignment with clustering variables to examine

cluster variable means by cluster

"""

# FINALLY calculate clustering variable means by cluster

clustergrp = merged_train.groupby('cluster').mean()

print ("Clustering variable means by cluster")

print(clustergrp)

# validate clusters in training data by examining cluster differences in polityscore using ANOVA

# first have to merge polityscore with clustering variables and cluster assignment data

polityscore_data=data_clean['POLITYSCORE']

# split polityscore data into train and test sets

polityscore_train, polityscore_test = train_test_split(polityscore_data, test_size=.3, random_state=123)

polityscore_train1=pd.DataFrame(polityscore_train)

polityscore_train1.reset_index(level=0, inplace=True)

merged_train_all=pd.merge(polityscore_train1, merged_train, on='index')

sub1 = merged_train_all[['POLITYSCORE', 'cluster']].dropna()

import statsmodels.formula.api as smf

import statsmodels.stats.multicomp as multi

gpamod = smf.ols(formula='POLITYSCORE ~ C(cluster)', data=sub1).fit()

print (gpamod.summary())

print ('means for POLITYSCORE by cluster')

m1= sub1.groupby('cluster').mean()

print (m1)

print ('standard deviations for POLITYSCORE by cluster')

m2= sub1.groupby('cluster').std()

print (m2)

mc1 = multi.MultiComparison(sub1['POLITYSCORE'], sub1['cluster'])

res1 = mc1.tukeyhsd()

print(res1.summary())