As in the Decision Trees assignment, I am using the Gapminder dataset to run the Random Forest experiment.

Data Management

As in the previous assignment, I am using a binary categorical response variable derived from ‘polityscore’ and a number of quantitative explanatory variables. The polity score ranges from -10 to 10: -10 to -5 is considered Autocratic, -5 to 5 is considered Anocratic, and 5 to 10 is considered Democratic. Countries falling in the Anocratic range are usually politically neutral. The variable is recoded so that the value is 1 if a country is Autocratic or Democratic and 0 otherwise.
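The recoding described above can be sketched roughly as follows. This is only an illustration: the sample scores are made up, the column name `polity_bin` is hypothetical, and counting the boundary scores -5 and 5 as Autocratic and Democratic is an assumption, since the ranges above overlap at those values.

```python
import pandas as pd

# Made-up polity scores for illustration; the real values come from the Gapminder data.
df = pd.DataFrame({"polityscore": [-10, -7, -5, 0, 3, 5, 8, 10]})


def recode_polity(score):
    """Return 1 for Autocratic or Democratic scores, 0 for Anocratic ones.

    Assumption: the boundary scores -5 and 5 count as Autocratic and
    Democratic respectively; the post does not say which side they fall on.
    """
    return 1 if abs(score) >= 5 else 0


# 'polity_bin' is a hypothetical name for the recoded binary response variable.
df["polity_bin"] = df["polityscore"].apply(recode_polity)
print(df)
```

If the boundary scores should instead count as Anocratic, changing `>=` to `>` is enough.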

The Random Forest

The code (last section of the post) is similar to the decision tree experiment from the previous assignment. The Random Forest Classifier is initialized with 25 estimators; that is, the random forest consists of 25 trees.
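For readers who want to see the shape of such a setup, here is a minimal sketch using scikit-learn. It runs on synthetic data from `make_classification` rather than the Gapminder file, and the 60/40 split and the `random_state` values are assumptions chosen for the example, not settings taken from this experiment.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the explanatory variables and the binary response.
X, y = make_classification(n_samples=150, n_features=6, random_state=0)

# Assumed 60/40 split between training and test samples.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0
)
print(X_train.shape, X_test.shape)

# A random forest made up of 25 trees, as described in the text.
clf = RandomForestClassifier(n_estimators=25, random_state=0)
clf.fit(X_train, y_train)

# Predicted classes for the held-out test sample.
predictions = clf.predict(X_test)
print(len(clf.estimators_), "trees in the forest")
```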

Shape of training and test samples

Correct and incorrect classification matrix

The accuracy of the model came out as 0.830508474576. That is, approximately 83% of the countries in the sample were classified correctly as having or not having a political bias.
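To make the link between the classification matrix and the accuracy score concrete, here is a small sketch with hand-made labels (not the results of this experiment). It uses scikit-learn's `confusion_matrix` and `accuracy_score`; accuracy is simply the number of correct classifications on the diagonal divided by the total.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

# Hand-made labels for illustration only: 1 = political bias, 0 = no bias.
y_true = [1, 1, 0, 1, 0, 0, 1, 1]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

# Rows are the true classes, columns the predicted classes (order: 0, 1).
cm = confusion_matrix(y_true, y_pred)
print(cm)

# Accuracy is the share of predictions that match the true class.
acc = accuracy_score(y_true, y_pred)

# The same number can be read off the matrix: diagonal sum over total.
acc_from_matrix = cm.trace() / cm.sum()
print(acc, acc_from_matrix)
```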

Measured Importance of Explanatory Variables (Features)


From the above figures, the variable with the highest importance score is Internet Use Rate, with a score of 0.19749606, and the variable with the lowest importance score is Employment Rate, with a score of 0.10237368.
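Importance scores like the ones above can be read from a fitted forest. The sketch below is one possible way to do it with scikit-learn's `feature_importances_` attribute, and may differ from the method used in the post. The data is random, the column names are only illustrative stand-ins for Gapminder variables, and the response is constructed so that one column carries the signal.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Illustrative column names; the values are random, not Gapminder data.
feature_names = ["internetuserate", "employrate", "urbanrate", "lifeexpectancy"]
X = pd.DataFrame(rng.normal(size=(120, 4)), columns=feature_names)

# Synthetic binary response that depends mostly on the first column.
y = (X["internetuserate"] + 0.5 * rng.normal(size=120) > 0).astype(int)

clf = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

# One score per explanatory variable, sorted from most to least important.
importances = pd.Series(clf.feature_importances_, index=feature_names)
importances = importances.sort_values(ascending=False)
print(importances)
```

In scikit-learn these scores are normalized to sum to 1, so each one can be read as a relative share of importance rather than an absolute quantity.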

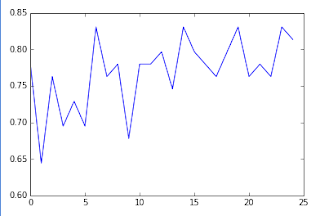

Correct classification rate for different numbers of trees

We saw above that the classification accuracy was 83%, achieved with 25 trees in our random forest experiment. The figure below shows the accuracy score plotted against the number of trees. The figure gives us an idea of whether 25 trees were indeed needed to achieve the accuracy score of 83%.

From the above plot, we see that with 6, 14, 18 and 23 trees the accuracy can actually be higher than with our chosen number, which is 25.
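A comparison like this can be produced by refitting the forest once per tree count and recording the accuracy each time. The sketch below does that on synthetic data; the 60/40 split and the `random_state` values are assumptions for the example, so the numbers it prints will not match the ones reported here.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data with an assumed 60/40 train/test split.
X, y = make_classification(n_samples=150, n_features=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0
)

# Fit one forest per tree count from 1 to 25 and record its test accuracy.
tree_counts = range(1, 26)
accuracy = np.zeros(len(tree_counts))
for i, n_trees in enumerate(tree_counts):
    clf = RandomForestClassifier(n_estimators=n_trees, random_state=0)
    clf.fit(X_train, y_train)
    accuracy[i] = accuracy_score(y_test, clf.predict(X_test))

# Tree count with the highest accuracy (the first one, if there are ties).
best = tree_counts[int(np.argmax(accuracy))]
print("best number of trees:", best, "accuracy:", accuracy.max())
```

Plotting `accuracy` against `tree_counts` gives the kind of curve discussed above. On a small test sample, differences between nearby tree counts may partly reflect random variation rather than a real advantage.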

Code
